LavinMQ Prefetch

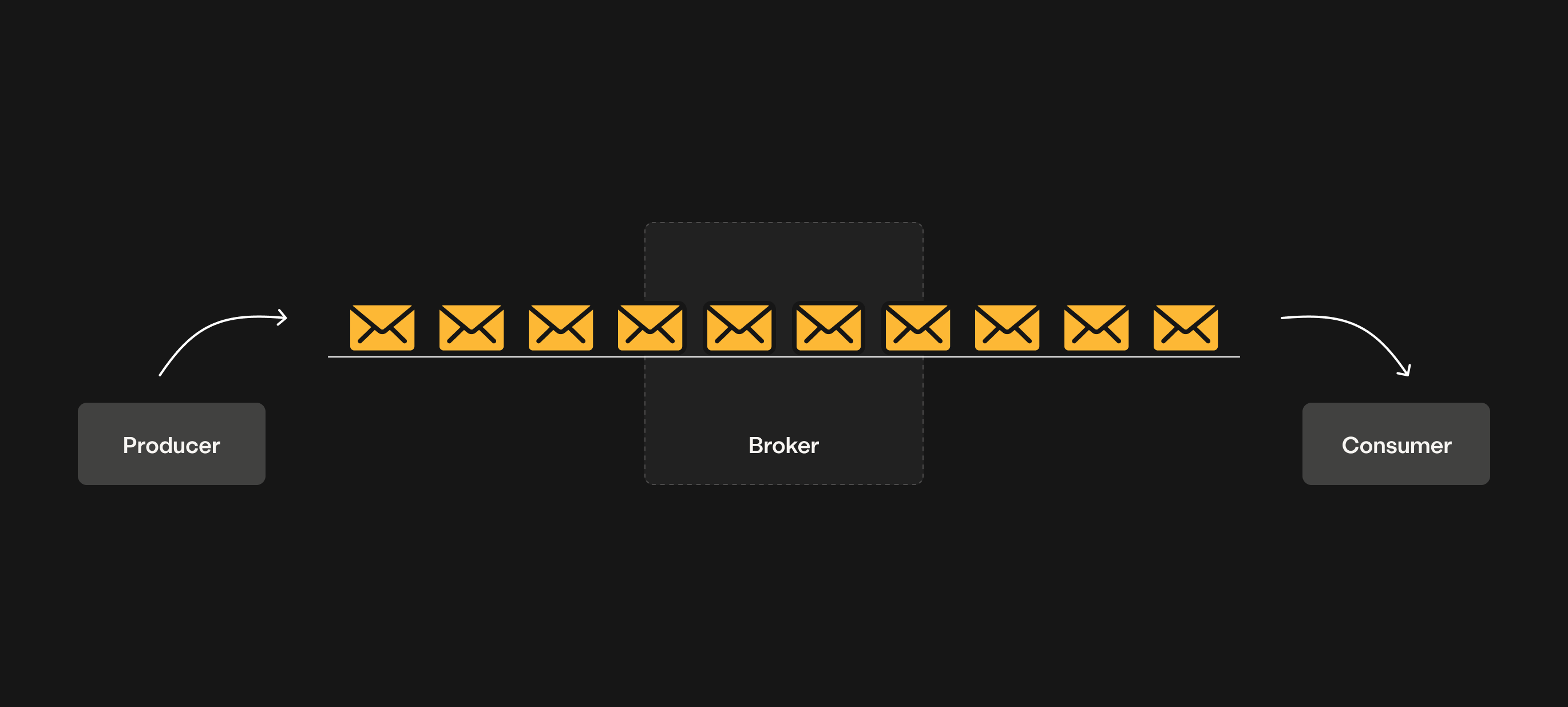

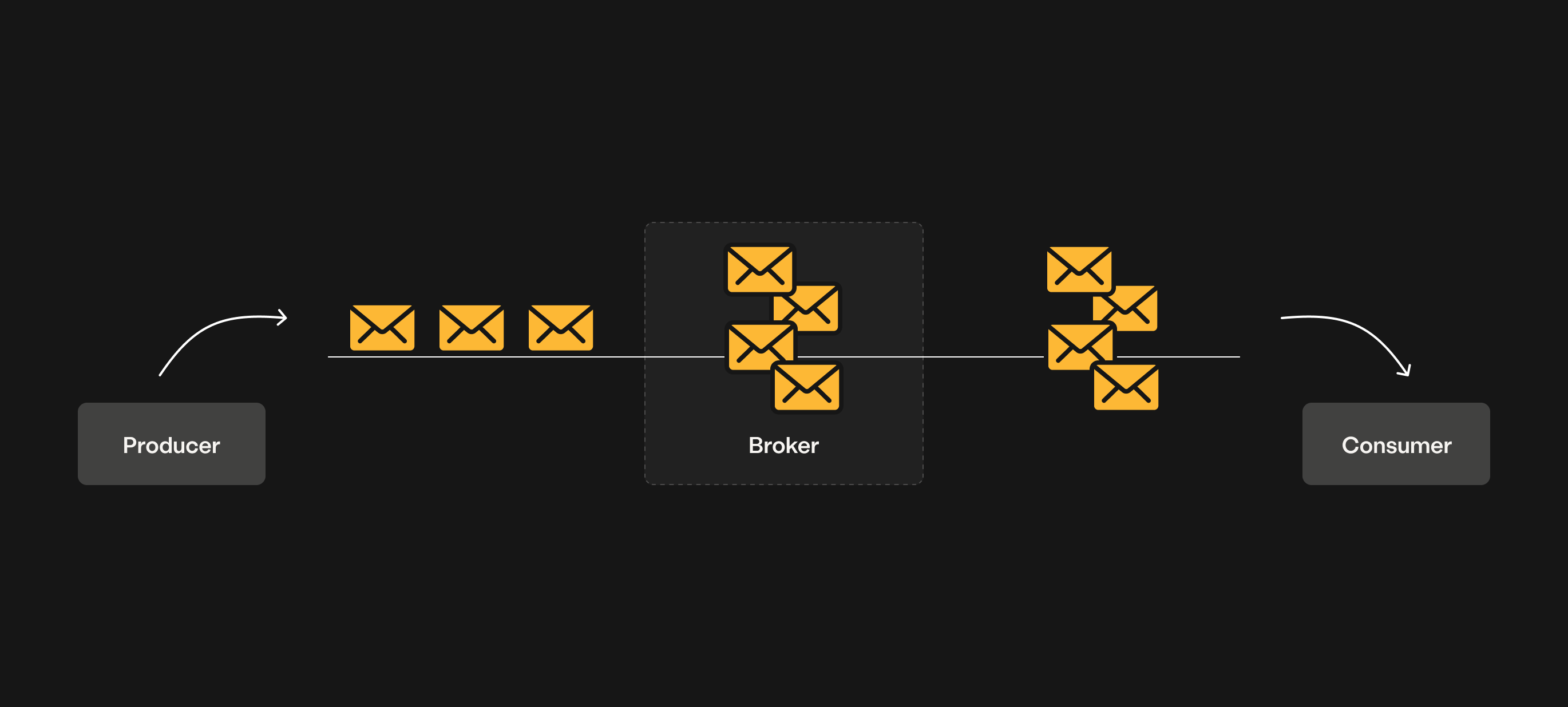

Prefetch controls how many messages a consumer can receive before acknowledging them. Once a consumer holds the specified number of unacknowledged messages, the broker pauses delivery to that consumer until at least one message is acknowledged.

Limiting unacknowledged messages prevents messages from piling up at a single client. Each consumer receives only as much work as it can process without getting overwhelmed, which keeps all consumers actively engaged, balances the workload, and makes better use of resources.

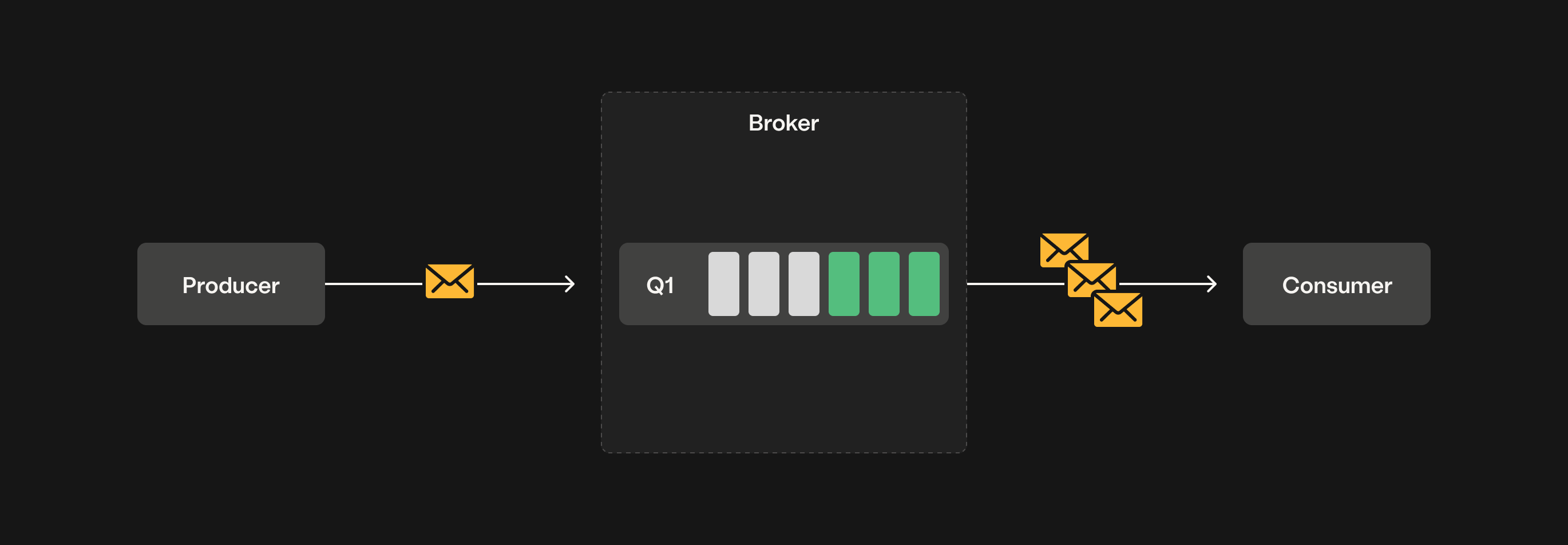

LavinMQ offers two types of prefetch options:

- Channel Prefetch – This option limits the number of unacknowledged messages across all consumers on a channel. It helps control the overall message flow on that channel.

- Consumer Prefetch – This option limits the number of unacknowledged messages per consumer.

Prefetch settings apply only when consuming messages from a queue (not when using the get method) and only if explicit acknowledgments are required. Prefetched messages are not re-queued or delivered to other consumers; they remain assigned to the current consumer and are marked as unacknowledged until that consumer acknowledges them.
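The pause-and-resume behavior described above can be illustrated with a small in-memory model. This is only a sketch for building intuition, not LavinMQ's implementation: the `PrefetchSimulator` class and its method names are made up for this example, and it models a single consumer on a single queue.

```python
from collections import deque


class PrefetchSimulator:
    """Toy model of a queue delivering to one consumer under a prefetch limit."""

    def __init__(self, messages, prefetch_count):
        self.ready = deque(messages)          # messages still waiting in the queue
        self.unacked = []                     # delivered but not yet acknowledged
        self.prefetch_count = prefetch_count  # 0 is treated as "no limit"

    def deliver(self):
        """Deliver as many messages as the prefetch window allows and return them."""
        delivered = []
        while self.ready and (
            self.prefetch_count == 0 or len(self.unacked) < self.prefetch_count
        ):
            msg = self.ready.popleft()
            self.unacked.append(msg)
            delivered.append(msg)
        return delivered

    def ack(self, msg):
        """Acknowledge one message, freeing a slot in the prefetch window."""
        self.unacked.remove(msg)


sim = PrefetchSimulator(messages=["m1", "m2", "m3", "m4", "m5"], prefetch_count=3)
first = sim.deliver()    # the window fills with m1, m2, m3
stalled = sim.deliver()  # nothing is delivered while the window is full
sim.ack("m1")            # acknowledging one message frees one slot
resumed = sim.deliver()  # delivery resumes with m4
print(first, stalled, resumed)
```

With a prefetch of three, the fourth message is held back until one of the first three is acknowledged.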

By default, LavinMQ sets the prefetch limit to 65,535 (2¹⁶ − 1) messages. This value can be configured using the default_consumer_prefetch setting.

Using prefetch

The basic.qos method (Quality of Service) sets the prefetch count on a channel. Client libraries usually expose it as basic_qos, and it includes a global flag. Setting this flag to:

- false applies the prefetch count to each consumer individually (consumer prefetch).

- true applies the prefetch count to all consumers on the channel (channel prefetch).

To remove the limit entirely (unbounded prefetch), set the prefetch count to 0 with basic_qos(0).
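As a rough sketch, the three modes (consumer prefetch, channel prefetch, and unbounded) could be wrapped in one small helper. The `set_prefetch` function and the `RecordingChannel` stand-in are hypothetical names introduced for this example. The stand-in only records calls so the snippet runs without a broker; with the pika client you would pass a real channel, whose `basic_qos` method takes the flag as `global_qos`.

```python
class RecordingChannel:
    """Hypothetical stand-in for a pika channel; it only records basic_qos calls."""

    def __init__(self):
        self.qos_calls = []

    def basic_qos(self, prefetch_size=0, prefetch_count=0, global_qos=False):
        self.qos_calls.append(
            {"prefetch_count": prefetch_count, "global_qos": global_qos}
        )


def set_prefetch(channel, count, per_channel=False):
    """Apply a prefetch limit to a channel; a count of 0 means unbounded."""
    # The prefetch count is a 16-bit unsigned field in AMQP 0-9-1.
    if not 0 <= count <= 65535:
        raise ValueError("prefetch count must be between 0 and 65535")
    channel.basic_qos(prefetch_count=count, global_qos=per_channel)


channel = RecordingChannel()
set_prefetch(channel, 3)                     # consumer prefetch: 3 per consumer
set_prefetch(channel, 10, per_channel=True)  # channel prefetch: 10 shared on the channel
set_prefetch(channel, 0)                     # unbounded prefetch
```

Keeping the range check in one place avoids sending a value the protocol field cannot hold.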

Example: Limit the number of unacknowledged messages per consumer to three.

import pika

connection = pika.BlockingConnection(pika.ConnectionParameters('localhost'))

channel = connection.channel()

channel.queue_declare(queue='task_queue', durable=True)

channel.basic_qos(prefetch_count=3, global_qos=False)
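Because prefetch only takes effect with explicit acknowledgments, a consumer also needs to acknowledge each message once it is done with it. The sketch below shows one way this might look with pika. The `handle_task` callback and `main` function are names chosen for this example, and the broker address and queue name are assumed to match the snippet above.

```python
def handle_task(ch, method, properties, body):
    """Process one message, then acknowledge it to free a prefetch slot."""
    print(f"Processing {body.decode()}")
    ch.basic_ack(delivery_tag=method.delivery_tag)


def main():
    # Imported here so handle_task can be exercised without pika installed.
    import pika

    connection = pika.BlockingConnection(pika.ConnectionParameters('localhost'))
    channel = connection.channel()
    channel.queue_declare(queue='task_queue', durable=True)

    # At most three unacknowledged messages per consumer.
    channel.basic_qos(prefetch_count=3, global_qos=False)

    # auto_ack=False makes acknowledgments explicit, so the prefetch limit applies.
    channel.basic_consume(
        queue='task_queue',
        on_message_callback=handle_task,
        auto_ack=False,
    )
    channel.start_consuming()
```

Calling `main()` against a running broker starts the consumer. Each `basic_ack` lets the broker deliver the next waiting message.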

Looking for help on setting the prefetch value? Check out our blog, “How to set the correct prefetch value in LavinMQ”, for more information.

